tl;dr: Training a network to classify images (with a single label) is modeled as a sequential decision problem where actions are salient locations in the image and tentative labels. The state (full image) is partially observed through a fixed size subimage around each location. The policy takes the full history into account compressed into a hidden vector via an RNN. REINFORCE is used to compute the policy gradient.

Although the paper targets several applications, to fix ideas, say we want to classify images with one label. These can vary in size but the number of parameters of the model will not change. Taking inspiration from how humans process images, the proposed model iteratively selects points in the image and focuses on local patches around them at different resolutions. The problem of choosing the locations and related classification labels is cast as a reinforcement learning problem.

Attention as a sequential decision problem

One begins by fixing one image x and selecting a number T of timesteps. At each timestep t=1,…,T:

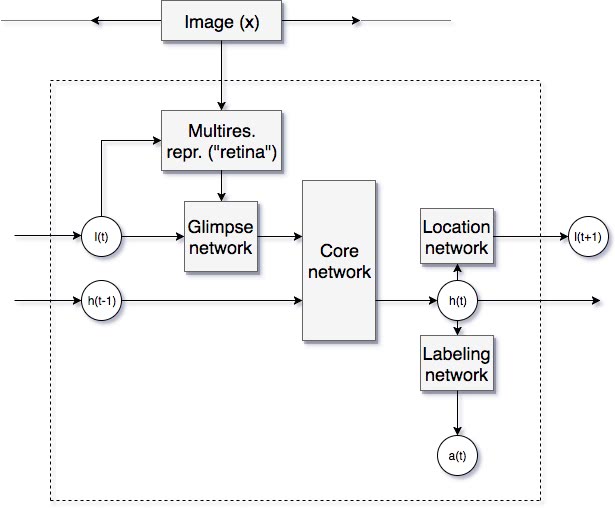

- We are in some (observed) state st∈S: it consists of a location lt in the image (a pair of coordinates), and a corresponding glimpse xt of x. This glimpse is a concatenation of multiple subimages of x taken at different resolutions, centered at location lt, then resampled to the same size. How many (k), at what resolutions (ρ1,…,ρk) and to what fixed size (w) they are resampled are all hyperparameters.1 The set of all past states is the history: s1:t−1:={s1,…,st−1}.

- We take an action at=(lt,yt)∈A, with the new location lt in the (same) image x to look at and the current guess yt as to what the label for x is. In the typical way for image classification with neural networks, yt is a vector of “probabilities” coming from a softmax layer. Analogously, the location lt is sampled from a distribution parametrized by the last layer of a network. The actions are taken according to a policy πtθ:St→P(A), with St=S×t−1⋯×S, and P(A) the set of all probabilty measures over A. The policy is implemented as a neural network, where θ represents all internal parameters. The crucial point in the paper is that the network takes the whole history as input, compressed into a hidden state vector, i.e. the policy will be implemented with a recurrent network. Because parameters are shared across all timesteps, we drop the superindex t and denote its output at timestep t by πθ(at|s1:t).

- We obtain a scalar reward rt∈{0,1}. Actually, the reward will be 0 at all timesteps but the last (T), where it can be either 0 if yT predicts the wrong class or 1 if it is the right one.2

The model at timestep t.

Note that the policy πθ(at|s1:t) has two “heads”, a labeling network fy, outputting a probability of the current glimpse belonging to each class and a location network fl. Only the output of the latter directly influences the next state. This is important when computing the distribution over trajectories τ=(s1,a1,…,sT,aT) induced by the policy:

pθ(τ):=p(s1)T∏t=1πθ(at∣st)p(st+1∣st,at)=p(s1)T∏t=1p(lt∣fl(st;θ))p(st+1∣st,lt).

The goal is to maximise the total expected reward

J(θ):=Eτ∼pθ(τ)[T∑t=1r(st,at)].

The algorithm used is basically the policy gradient method with the REINFORCE rule:3

Algorithm 1:

- Initialise πθ with some random set of parameters.

- For n=1…N, pick some input image xn with label yn.

- Sample some random initial location l0.

- Run the policy (the recurrent network) πθ for T timesteps, creating new locations lt and labels yt. At the end collect the rewardrT∈{0,1}.

- Compute the gradient of the reward ∇θJ(θn).

- Update θn+1←θn+αn∇θJθ(θn).

The difficulty lies in step 5 because the reward is an expectation over trajectories whose gradient cannot be analitically computed. One solution is to rewrite the gradient of the expectation as another expectation using a simple but clever substitution:

∇θJ(θ)=∫∇θpθ(τ)r(τ)dτ=∫pθ(τ)∇θpθ(τ)pθ(τ)r(τ)dτ=∫pθ(τ)∇θlogpθ(τ)r(τ)dτ,

and this is

∇θJ(θ)=Eτ∼pθ(τ)[∇θlogpθ(τ)r(τ)]

In order to compute this integral we can now use Monte-Carlo sampling:

∇θJ(θ)≈1MM∑m=1∇θlogpθ(τm)r(τm),

and after rewriting logpθ(τ) as a sum of logarithms and discarding the terms which do not depend on θ we obtain:

∇θJ(θ)≈1MM∑m=1T∑t=1∇θlogπθ(amt|sm1:t)rm,

where rm=rmT is the final reward (recall that in this application rt=0 for all t<T). In order to reduce the variance of this estimator it is standard to subtract a baseline estimate $b =\mathbb{E}_{\pi{\theta}} [r{T}]$ of the expected reward, thus arriving at the expression

∇θJ(θ)≈1MM∑m=1T∑t=1∇θlogπθ(amt|sm1:t)(rm−b).

There is a vast literature on the Monte-Carlo approximation for policy gradients, as well as techniques for variance reduction.4

Hybrid learning

Because in classification problems the labels are known at training time, one can provide the network with a better signal than just the reward at the end of all the process. In this case the authors

optimize the cross entropy loss to train the [labeling] network fy and backpropagate the gradients through the core and glimpse networks. The location network fl is always trained with REINFORCE.

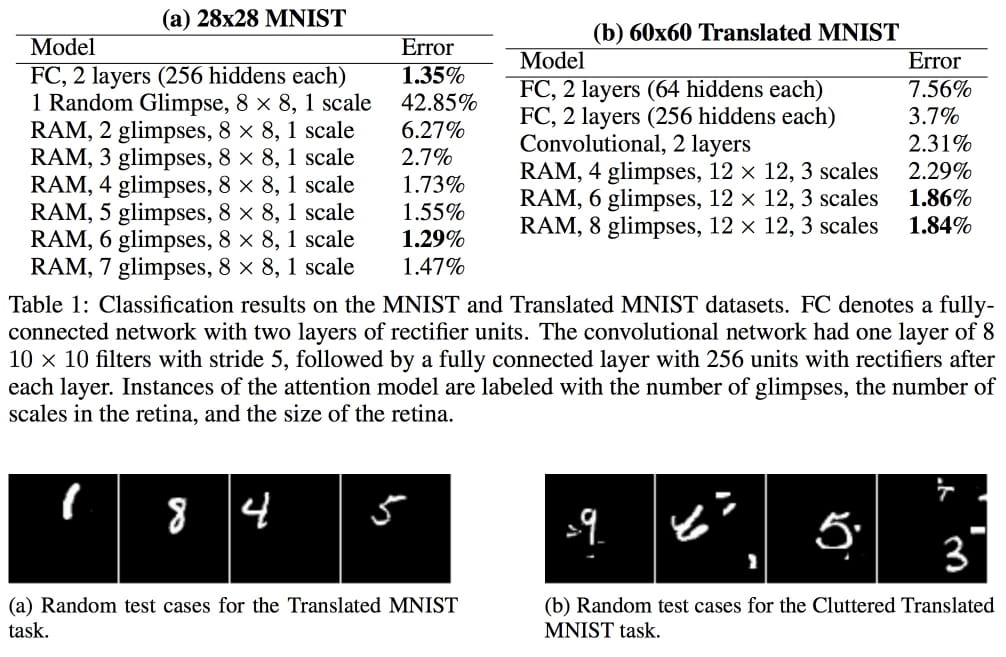

Results for image classification

An image is worth a thousand words:

- It would be nice to try letting a CNN capture the relevant features for us, instead of fixing the resolutions. I’m sure this has been tried since 2014. ⇧

- I wonder: wouldn’t it make more sense to let rt∈[0,1] instead using the cross entropy to the 1-hot vector encoding the correct class? ⇧

- See e.g. Reinforcement Learning: An Introduction, (2018) , §13.3. ⇧

- Again, see Reinforcement Learning: An Introduction, (2018) . ⇧